Books - Read and Enjoyed

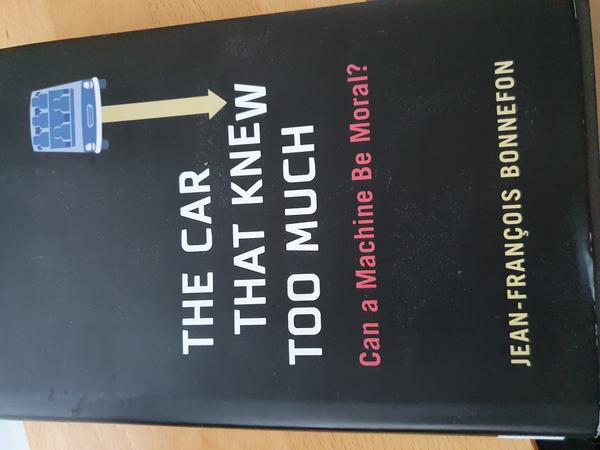

The Car That Knew Too Much

Can a Machine Be Moral?

Jean-François Bonnefon

MIT Press, 2021

When I was in high school I was fascinated by many questions of philosophy, such as what the essence of human beings is, what the basis of all physical laws is, or what the origin of the universe is. But I soon came to conclude that, while these questions are endlessly fascinating, the practical value of pondering them and their possible answers is very limited. The tools of philosophy are the defining and refining of abstract concepts, probing their inherent consistency and gauging their value against observation in the real world. However, it turns out that for understanding the nature of the physical world the devices of the natural sciences are much more effective. With mathematically framed theories and elaborate experiments, physicists, chemists and biologists have come much further in understanding the laws of the physical world than philosophers could ever dream of. The scientific method, wherever it can be applied, holds much greater promise of new insights and understanding than philosophy. Only at the fringes, I concluded, where science cannot yet be applied effectively, can philosophical pondering be useful in clarifying concepts and guiding scientific investigation in the right direction. But at the fringes of established knowledge, beyond the edges of solid, provable understanding, progress is glacially slow and new insights are far apart, exactly because there is no trustworthy knowledge secured by repeatable experiments and everything has to be questioned again, and again, and again.

Thus, for a long time I have been of the opinion that pondering questions of philosophy is entertaining but does not lead to specific conclusions that can be usefully applied anywhere. However, with the advent of true general artificial intelligence (GAI) this seems to be changing. If we want to steer the development of GAI in any meaningful way, we finally need to understand who we are, what we want, what our values are, and what the basis of our decision making is. If we want GAI to be aligned with human values, as Nick Bostrom, Max Tegmark, Toby Ord and many others propose we should, we first need to understand our values. If we aim at GAI that is compatible with humans, that can coexist with us peacefully, respectfully and productively, as for instance Stuart Russell argues we should, we need to understand the mechanisms of group interaction, joint opinion building and group decision making, and how all of that gives rise to the societies we live in. More than 2500 years after wise philosophers formulated the ultimate quest at the entrance to the Delphic Oracle as “Know thyself”, we still do not know what our values are, how we make decisions, why our objectives and goals are structured as they are, and, particularly pressing, how we function as societies. Our knowledge is still very anecdotal, complemented by some solid but rare scientific understanding of individual phenomena but lacking an overarching frame of explanation.

The development of GAI will force us to focus on these questions, and it will also give us the means to study them, because for the first time in our history we will be able to observe the behavior of truly intelligent agents that are completely unlike us. We will be able to do experiments to study, for instance, how agents with different goal structures and decision making processes give rise to different group dynamics and emergent system behavior.

The book “The Car That Knew Too Much” is a harbinger of such studies: it focuses on the moral questions that autonomous driving will pose. It assumes that autonomous cars will not be perfect and will still be involved in accidents that harm humans. Even though the number of accidents and people killed may be greatly reduced, autonomous cars will end up in situations where an accident cannot be avoided. However, the car may be able to influence the precise course of the accident and its outcome. In such a situation the car may be able to decide whether it runs over a child or an elderly person, a pedestrian or a cyclist, and whether it minimizes the risk to its passengers or to others on the road. Hence the car, or rather the designers of its decision making algorithm, have to decide how to answer these moral questions.

Jean-François Bonnefon and his collaborators have studied human preferences regarding these questions. On a website they have asked millions of people about their preferences in moral dilemmas that an autonomous car may be confronted with. I recommend that you take a look at their website and answer a couple of the moral dilemma questions: https://www.moralmachine.net/

While the scope of this study is limited, the book gives a vivid account of the kind of moral questions that we have to confront with the emergence of autonomous driving. The examples on the Moral Machine website are simplified and feel unrealistic, but the dilemmas are real, although they will appear in very subtle ways. For instance, the car’s decision making algorithm will have to decide how much distance it should keep from cyclists, pedestrians, and other cars. This decision alone will have an impact on the statistics of accidents in which cyclists, pedestrians and other cars are involved. It becomes an obvious dilemma if there is a trade-off between the distance to a cyclist and the distance to a pedestrian. While in any specific case the chances are that no accident happens, statistically there will be accidents, and the specifics of the decision algorithm will affect the statistics of injured and killed humans.
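To make the statistical nature of this trade-off concrete, here is a minimal toy sketch in Python. It is not taken from the book or from the Moral Machine study: the gap width, the base risk and the exponential risk model are all hypothetical assumptions chosen only for illustration. The car passes between a cyclist and a pedestrian, and every meter it gives to one it takes from the other.

```python
import math
from typing import Tuple

# All numbers below are hypothetical and chosen only for illustration.
GAP_M = 3.0          # total free space shared between cyclist and pedestrian (m)
BASE_RISK = 1e-4     # assumed per-pass collision probability at zero distance
SCALE_M = 0.5        # assumed distance over which the risk falls by a factor of e
PASSES = 1_000_000   # number of passes over which the statistics are computed


def collision_probability(distance_m: float) -> float:
    """Toy model (an assumption): risk decays exponentially with distance."""
    return BASE_RISK * math.exp(-distance_m / SCALE_M)


def expected_accidents(distance_to_cyclist_m: float) -> Tuple[float, float]:
    """Return expected (cyclist, pedestrian) accidents over PASSES passes."""
    # Whatever distance is not given to the cyclist is left for the pedestrian.
    distance_to_pedestrian_m = GAP_M - distance_to_cyclist_m
    cyclist = PASSES * collision_probability(distance_to_cyclist_m)
    pedestrian = PASSES * collision_probability(distance_to_pedestrian_m)
    return cyclist, pedestrian


if __name__ == "__main__":
    for d in (1.0, 1.5, 2.0):
        cyclist, pedestrian = expected_accidents(d)
        print(f"distance to cyclist {d:.1f} m: "
              f"cyclist {cyclist:.2f}, pedestrian {pedestrian:.2f}")
```

In this toy model no setting is neutral: moving the car toward either side lowers the expected number of accidents for one group and raises it for the other, even though almost every individual pass ends without an accident.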

When machines become smart enough to make decisions that involve trade-offs between objectives we value differently, we have to decide these trade-offs. To do that, we need to understand what we want, what our values are, and, even more importantly, what kind of societies emerge when we tune the trade-offs in a specific way. When GAI agents become reality, they will interact with us as moral subjects that decide moral trade-offs. The societies formed jointly by them and us will be profoundly shaped by the way the GAI agents decide moral questions. We need to understand what kind of societies will emerge and what kind of societies we prefer.

(AJ May 2022)