Books - Read and Enjoyed

The Idea of the Brain

The Past and Future of Neuroscience

Basic Books, New York, 2020

by Matthew Cobb

The book consists of two parts. In the first part Matthew Cobb reviews the conceptions and theories of the brain and the gradually increasing understanding of its elements, structure and functionality. The second part presents the state of the art regarding memory, connection networks in the brain, localisation of functions, the role of neurotransmitters at synapses, and consciousness.

I

The historical overview of our understanding of how mind and body are connected starts with the ancient civilizations, which, on all continents, predominantly held the view that the heart is the host of the mind. It was in ancient Greece that the idea that functions of the mind are localized in the brain was put forward with detail and insight. First, in the 5th century BC, Alcmaeon of Croton, a Greek town at the foot of Italy, formed the conviction that the senses and the intelligence are located in the brain. Then, around 400 BC on the island of Kos, in the school of Hippocrates, this concept was further elaborated and detailed in a document with the title On the Sacred Disease, which reads:

It ought to be generally known that the source of our pleasure,

merriment, laughter, and amusement, as of our grief, pain,

anxiety, and tears, is none other than the brain. It is specially

the organ which enables us to think, see, and hear, and to

distinguish the ugly and the beautiful, the bad and the good,

pleasant and unpleasant ... It is the brain too which is the

seat of madness and delirium, of the fears and frights which assail

us, often by night, but sometimes even by day, it is there where

lies the cause of insomnia and sleep-walking, of thoughts that

will not come, forgotten duties, and eccentricities.

The members of the school of Kos made anatomical studies and developed the incorrect theory that flowing air is a key agent in the functioning of the brain.

Later, in Alexandria, a more detailed understanding of and more insight into the anatomy of the brain was developed by Herophilus and Erasistratus, among others. 400 years later, around 200 CE, a major advance was made by Galen in Rome, who made very detailed anatomical studies and experiments and advanced a number of theories on how the brain functions. Galen’s work was very voluminous (170 treatises survive) and influential.

However, in ancient Greece and Rome the importance of the brain was not undisputed; in particular Aristotle, the most influential ancient Greek author, opposed it. He wrote in Parts of Animals:

And of course, the brain is not responsible for any of the

sensations at all. The correct view [is] that the seat and source

of sensation is the region of the heart ... the motions of

pleasure and pain, and generally all sensation plainly have their

source in the heart.

This dispute was only settled in the 17th century after many more studies, experiments, and theories. In Shakespeare’s time neither view was yet unanimously accepted, as summed up in The Merchant of Venice:

Tell me where is fancy bred,

Or in the heart or in the head?

Progress was made rapidly in the centuries that followed, and while the disagreement about the location of mental activities has been settled, it has been replaced by the question whether functions of the mind, in particular consciousness, can be explained solely on the basis of the physical laws of matter and forces, or whether they originate from some metaphysical source.

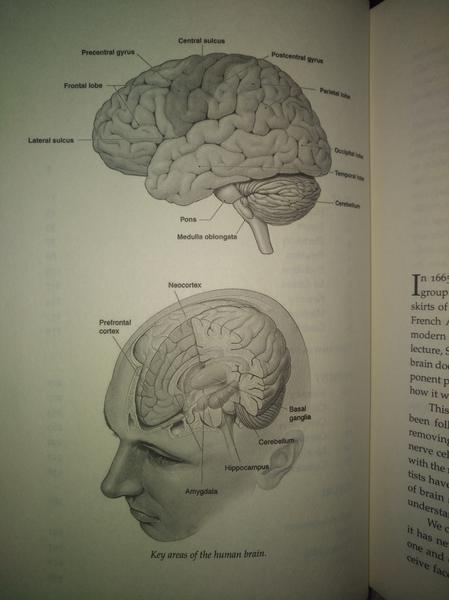

The brain is a complex organ with no discernible design principles and no obvious localization of functions. All functions of interest, like vision, audition, motor control, language, memory, planning, abstraction, and consciousness, are the result of the intricate interplay of many different regions and modules. This makes experiments hard to devise, their outcomes ambiguous, localization only ever partially successful, and progress generally slow, because all insights gained are inevitably limited and depend on specific assumptions and experimental context.

Moreover, it took a long time until the basic elements of the brain were understood. Only in the late 19th and early 20th century did it become clear that neurons are the building blocks, transmitting electrical impulses that can be regarded as information. Although in hindsight it seems obvious that the brain is an information processing organ, this insight could not develop before the concept of information emerged. In the first half of the 20th century the conceptual and mathematical foundations for information theory, control theory, and computing were developed, and only in parallel to these advances could the brain gradually be understood as a computer. After it was hypothesized that neurons and networks of neurons perform information processing, the question of how the information is encoded arose, which is not completely resolved even today. It seems that neurons use the rate of firing to encode more or less of something. A higher frequency of firing means more of the something that the neuron represents, and we know of neurons that represent colors, movement, edges and even specific objects or persons. But how exactly the concept of a house as a sheltering object for humans is represented, and how entering and leaving the house is stored in episodic memory, still seems to be a mystery.

II

The second part reviews the state of knowledge today. In particular it presents our understanding of memory, structural features, the effects of certain chemicals on coarse-grained behavior, localization of functions, and consciousness.

At the neuron level memory is built by strengthening connections between neurons if they are active together (what fires together, wires together). Short-term memory is realized by quickly effected changes in the connection between two neurons, essentially improving signal transmission across synapses. Longer-term memory effects are realized by building more synapses, by establishing new connections between neurons and by growing new neurons. Long-term memory seems to be broadly distributed across the entire brain, but the hippocampus plays a crucial role in the formation of new memories, because when it is damaged or removed the formation of new memories is greatly reduced or becomes altogether impossible.
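The "fires together, wires together" rule can be illustrated with a toy sketch. This is not taken from the book; the function names, the learning rate and the decay value are my own assumptions, chosen only to show that a connection grows when two neurons are repeatedly active together and stays weak when they are not.

```python
def hebbian_update(weight, pre_active, post_active, learning_rate=0.1, decay=0.01):
    """Return the new connection strength after one time step (toy rule)."""
    if pre_active and post_active:
        # Both neurons fired together: strengthen the connection.
        return weight + learning_rate
    # Otherwise let the connection fade slowly, but never below zero.
    return max(0.0, weight - decay)


def train(activity_pairs, weight=0.0):
    """Apply the update rule to a sequence of (pre, post) activity pairs."""
    for pre_active, post_active in activity_pairs:
        weight = hebbian_update(weight, pre_active, post_active)
    return weight


# Hypothetical activity patterns: one pair of neurons always fires together,
# the other pair never does.
correlated = [(True, True)] * 20
uncorrelated = [(True, False), (False, True)] * 10

w_correlated = train(correlated)
w_uncorrelated = train(uncorrelated)
print(w_correlated > w_uncorrelated)
```

Real synapses are of course far more intricate; the sketch only captures the direction of the effect.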

Neurons form neural networks to realize brain functions. The main guiding analogy comes from computer science, where artificial neural networks have emerged as a celebrated and highly successful method in machine learning, image processing, robotics, language processing, and countless other problem domains. In those artificial neural networks input neurons are presented with inputs (e.g. the pixels of an image); they react by firing, or not firing, which serves as input to a next, inner or hidden, layer of neurons in the network. After a set of hidden layers has been activated in sequence, an output layer of neurons produces the result of the computation, e.g. the identification and location of a cat, a car or a cyclist in the image.
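To make the layered picture concrete, here is a minimal sketch of such a network with one hidden layer. The weights and thresholds are hand-picked by me so that the network computes the exclusive-or of two inputs; they are an assumption for illustration only. Practical networks learn their weights from data and use graded rather than all-or-nothing activations.

```python
def fire(weighted_sum, threshold):
    """A neuron fires (1) if its weighted input exceeds the threshold, else 0."""
    return 1 if weighted_sum > threshold else 0


def layer(inputs, weights, thresholds):
    """Activate one layer: each row of weights belongs to one neuron."""
    return [
        fire(sum(w * x for w, x in zip(row, inputs)), threshold)
        for row, threshold in zip(weights, thresholds)
    ]


# Hand-picked (not learned) parameters, assumed for this example.
HIDDEN_WEIGHTS = [[1, 1], [1, 1]]   # neuron 1 acts as OR, neuron 2 as AND
HIDDEN_THRESHOLDS = [0.5, 1.5]
OUTPUT_WEIGHTS = [[1, -1]]          # fires for "OR but not AND"
OUTPUT_THRESHOLDS = [0.5]


def network(inputs):
    """Input layer -> hidden layer -> output layer."""
    hidden = layer(inputs, HIDDEN_WEIGHTS, HIDDEN_THRESHOLDS)
    return layer(hidden, OUTPUT_WEIGHTS, OUTPUT_THRESHOLDS)[0]


for pattern in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(pattern, network(pattern))
```

The point of the hidden layer is that neither output can be computed by a single threshold neuron looking at the two inputs directly.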

While this analogy is widely considered plausible, it seems hard to pin down specific neural networks in the brain and associate them with specific functions. In the visual processing pipeline this has been partially successful. Early layers of neurons close to the retina identify brightness and colors, while layers behind them are sensitive to edges in different orientations, geometric shapes and motion. But progress is slow because of the diversity of cells and connection schemes, the unconstrained distribution of functions and the utter absence of modular design principles.

The lack of modularity is also an obstacle to localizing specific functions in the brain. While the brain is structured and specialized, there is no simple mapping of function to module anywhere in the brain. We know that the hippocampus facilitates the formation of long-term memory and is a necessary contributor, but it is by no means the only module involved, and the storage of memory content is certainly widely distributed. We know that Broca’s area is essential for spoken language generation, but again it is not the only region involved, and a range of other parts contribute to language generation, processing, and understanding. We have identified an individual cell that reacts only to images or the name of former president Bill Clinton, but it is certainly not the only cell with this feature, and we do not really know what role that cell plays as part of the information processing network.

The last chapter in part two of the book is devoted to consciousness, which is arguably the most perplexing phenomenon we know of. Philosophers have struggled with it for millennia and it is not clear how much progress we have really made. The problem is usually phrased as the question whether consciousness emerges from the laws of physics (the materialist view) or whether some metaphysical influence is required to explain it (the dualist view). Throughout the millennia of human intellectual history we find great minds in either camp (or in their variations or combinations). But it was surprising for me to learn that this debate is still not settled and that great contemporary scientists also promote dualistic theories, such as Charles Sherrington (Nobel prize 1932), Roger Penrose (Nobel prize 2020), and John Eccles (Nobel prize 1963). Christoph Koch, one of the most visible neuroscientists actively searching for the neural correlate of consciousness, has proposed that integrated information theory (the theory that Giulio Tononi and he have developed to understand and explain consciousness) has teleological implications, and he has suggested that there is some kind of urge in matter to become conscious, referencing the Jesuit mystic Teilhard de Chardin.

III

While this book’s chapter on consciousness indicates that confusion still reigns supreme in this matter, my view is that we are much closer to understanding it. I agree it is still mysterious how consciousness emerges in the human brain, but there is a contender theory that conceptually comes very close to explaining it, which is only mentioned in passing by Matthew Cobb. Bernard Baars proposed the global workspace theory (GWS) in the 1990s, and more recently it has been further developed by Stanislas Dehaene and others. The GWS theory considers consciousness as an emergent property of the global activity in the brain that essentially solves a resource allocation and attention problem. While there are many specialized modules in the brain that solve specific tasks such as image processing, object identification, sound processing, language generation, etc., the brain needs a global, centralized arbiter that decides which problem to focus on and what action to take. Should you focus on the ant, the leaf, the tree, or the tiger, all of which are identified in the visual field? The GWS theory compares consciousness to the global theater of the brain, where all those specialized modules contend for presence, but only one module, or a coalition of modules, is allowed to enter the stage. Once on the stage, the winning ensemble of modules takes control of attention and of the content of consciousness. All other modules are suppressed and pushed back into the realm of the subconscious. The theory employs a winner-takes-all method, a principle which seems to be common in neural networks, natural and artificial. When one ensemble wins and enters the stage of consciousness, it commands many resources in the brain and focuses them on the one most urgent problem to solve.
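The winner-takes-all step of this picture can be sketched in a few lines. This is only my own toy illustration, not an implementation of the global workspace theory: the function name and the urgency scores are made up, and the real theory involves coalitions of modules and recurrent dynamics that are ignored here.

```python
def select_for_workspace(bids):
    """Pick the module with the highest urgency; all others are suppressed.

    bids maps a module's content (e.g. a detected object) to an urgency score.
    """
    winner = max(bids, key=bids.get)
    suppressed = sorted(name for name in bids if name != winner)
    return winner, suppressed


# Hypothetical urgency scores for the objects in the visual field.
bids = {"ant": 0.1, "leaf": 0.05, "tree": 0.2, "tiger": 0.95}

winner, suppressed = select_for_workspace(bids)
print("on stage:", winner)
print("pushed back:", suppressed)
```

With these scores the tiger takes the stage and the ant, the leaf and the tree stay in the background.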

GWS is a mature theory developed over 20 years and many of its predictions are in line with observations and experiments. Although still a work in progress, it certainly describes a real mechanism operating in the brain that solves an important problem. Still, many questions are unanswered and some problems remain mysterious. In particular, what puzzles most is how to square the subjective experience of consciousness with any such algorithmic process. How could a global workspace, or any process in the brain, give rise to the subjective experience that we are all so familiar with day in, day out?

Part of the difficulty stems from the fact that consciousness is a subjective experience that we perceive from the inside of our thinking machine. In his 1974 paper “What Is It Like to Be a Bat?” Thomas Nagel elaborated on this difficulty and argued that consciousness cannot be fully explained if its subjective character is ignored.

Although I do not see how to reconcile these two perspectives either, we may shed new light on the problem by imagining for a moment a computer embedded in a robotic body. Let’s call it an agent. Our agent receives streams of data from several sensors. For the sake of illustration we assume there are two cameras, two microphones and several accelerometers that help the agent to track its position, attitude and velocity relative to the environment. The one central activity of the agent, which never ceases and to which everything else is subordinate, is to update a model of itself. The sole purpose of this model is to reflect the agent’s position relative to, and interaction with, its environment. All properties of the model only serve to accurately reflect, and predict, interactions with the environment. All data streaming in from the sensors are analyzed to keep track of changes of position, velocity and attitude relative to the environment.

In addition to sensors our agent also has actuators to steer a few motors, which allow the agent to move wheels, arms, etc. The agent’s inner model also covers and reflects the effects of actuator commands on its relative position in the environment. Thus the model, if sufficiently accurate, predicts correctly how the relative position would change as a result of a motor command, and, more generally, how the interaction with the environment would change as a result of the agent’s actions.

Hence, our agent has sensors to observe and monitor the environment and actuators to interact with and manipulate it. And it uses these capabilities to update its inner model in order to reflect its interaction with the environment as accurately as possible, and also to predict future trajectories of this interaction. In a way, the main purpose of our agent is to continuously improve the accuracy of these predictions. Whatever it does, its actions should facilitate improved powers of prediction.
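The loop of predicting, observing and correcting can be sketched for the simplest possible case. Everything here is an assumption of mine for illustration: the agent moves along one axis, its inner model is a single number (how far one unit of motor command moves it), and the update is a plain error-driven correction.

```python
def run_agent(true_gain=0.8, steps=50, learning_rate=0.2):
    """Let the agent refine its inner model of its own motor.

    true_gain is the real displacement per unit command (unknown to the agent);
    estimated_gain is the agent's inner model of it.
    """
    estimated_gain = 0.0
    command = 1.0
    errors = []
    for _ in range(steps):
        predicted = estimated_gain * command   # what the inner model expects
        observed = true_gain * command         # what the sensors report
        error = observed - predicted
        errors.append(abs(error))
        # Correct the inner model in proportion to the prediction error.
        estimated_gain += learning_rate * error * command
    return estimated_gain, errors


estimated_gain, errors = run_agent()
print(round(estimated_gain, 3), errors[0] > errors[-1])
```

The prediction error shrinks with every step, which is all that "improving the inner model" means in this toy setting.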

If our agent is equipped with mechanisms that facilitate continuous learning, then with sufficient computing resources it will develop rather sophisticated models of its own body and of the objects in its environment. If the environment contains only simple physical objects like stones, balls, rocks, liquids, etc., the agent will gradually develop a naive model of physical laws that allows for predicting how objects move, which objects smash other objects, which objects bounce off, how liquids flow, etc. If the environment contains objects that have an agency of their own, like other agents, our agent will gradually build models of their behavior as well. Gradually, our agent will understand causal relationships, because doing so makes for simpler models. When it sees a stone falling on a glass bottle, it will conclude that the action of the stone smashed the bottle. And when it observes another agent grabbing a stone and throwing it onto a glass bottle, it will start asking why that agent wanted to smash the bottle. Understanding causal effects and dependencies greatly improves the agent’s predictive powers.

Let’s make the world of our agent a little bit more complex still. Let’s assume our agent is not only interested in physical relations with its environment but also in “social” relations. By social relations we mean here that our agent groups other agents into those that it likes and those that it does not like, and, above all, that it wants to be liked by other agents. For now we gloss over the many aspects and complications of social relations and consider them only as a relatively straightforward generalization of physical relations. Our agent’s relation with other physical objects is governed by laws of physics and geometry, and its relation with other agents is governed by laws of social interaction such as “I like those more that like me” and “I feel more worthy when other agents appreciate what I do”, etc.

Because of the complexity of its relations with the outside world the inner model has become quite complex and the agent spends huge effort and computational resources in maintaining, updating and improving the inner model of itself. Although it has, as a side effect, also built sophisticated models of all the environmental objects and subjects, it is anyway mostly concerned and preoccupied with its own inner model of itself. And this model is solely determined by its many interactions with the outside world.

Let’s try to imagine what it feels like to be this agent. New information about the environment is continuously pouring in, but all of it is interpreted exclusively in the light of its impact on the relation and interaction of the agent with the environment. Most of the time our agent’s computational activities circle around improving its own inner model, the models of other outside objects and agents, and how they all interact with each other. While continuously observing itself, the agent will not miss the fact that, in addition to interacting physically with the environment, it also engages in computational activities: data analysis, simulation and prediction of possible future events, and planning of actions. Again assuming the agent commands sufficient computational resources, the inner model of itself will also include this activity of model building and model improvement. Thus the agent’s model of itself reflects its physical interaction with environmental objects, its social interactions with other agents, and its engagement in self-model building. Since it has learned about the efficacy of the analysis of causal relations, it will wonder why it entertains a self-model, why it continuously improves it, and why it can observe itself in the first place.

We still do not know what it feels like to be this agent, but we can imagine that it is puzzled by what it observes. We can be sure that its perspective is very, very different from what we perceive, but we note that the content of its deliberations, “why do I have a self-model?”, “why can I observe myself?”, “why can I observe and hypothesize about the environment?”, is similar to the contents of our own thoughts. How would we know? If we have built this agent with conventional computer technology we certainly have every possibility to find out, since we could analyze its memory dump and we could monitor and trace each and every register and wire inside the agent.

Obviously we have not yet built such an agent, but there seems to be no fundamental reason why we could not do it with today’s technology. The difficulty is not that we do not have sufficient transistors or that those transistors are not sufficiently fast. The challenge is most likely that we do not know how to organize the learning algorithms, how to design the models that the agent is building, maintaining and improving, how to organize the goal structure, etc. But these are all problems of algorithm development, not a lack of computing resources or performance.

Hence, assuming that we, in the not so distant future, build such an agent, and we find out that the contents of its deliberations are fairly similar to our own thoughts that relate to awareness and consciousness, what conclusions should we draw? Even though we still will not know how it feels to be this agent, it will be legitimate to conclude that the agent has computational self-consciousness.

IV

In summary, Matthew Cobb’s book is pleasant to read and inspiring. In particular I like the historical overview of our understanding of mind and brain. It brings out the fact that progress has not been steady or straight, but rather has meandered through diverse and mostly incorrect and implausible ideas. Still, over the course of centuries we seem to be approaching an increasingly detailed understanding with ever increasing accuracy.

The review of the current state of the art is informative, but to my taste it could have been more detailed and accurate. It emphasizes too much what we do not know at the expense of describing established and broadly accepted knowledge. Partly this is certainly due to the fact that this area is progressing at high speed and any description of the state of affairs will be preliminary, incomplete and unsatisfactory for some. Nevertheless, the book definitely whets the appetite for learning more about our mind and following the research on the ideas of the brain.

(AJ December 2020)