Books - Read and Enjoyed

The Precipice

Existential Risk and the Future of Humanity

Bloomsbury Publishing, 2020

Toby Ord

Usually, when reading a book, I do not form a clear image of the author: whether I like the person behind the book, whether I admire him or her. Mostly I focus on the content of the book, its main messages, its story. With this book it was different from the first few pages. Right from the beginning Toby Ord made a distinctly likable impression. He comes across as a congenial person who deeply cares about individual people and about humanity as a whole. The combination of a truly empathetic author with the conceptual clarity of his writing, stringent and carefully weighed arguments, and eloquent expression makes the book both touching and convincing. Several times I caught myself thinking that I should stop reading for now so I could enjoy the book longer.

The book discusses existential risks for humanity, what is at stake, and what could be done to avoid extinction. Since 1945 we have, for the first time in history, been able to destroy ourselves, but we have still not found the ways, institutions and social mechanisms to safeguard our survival. Once we have survived this most dangerous period in humanity's history, we have a long and glorious future to look forward to. This future is limited only by the basic laws of physics and the structure of the expanding universe. This scary period, which Ord calls the Precipice, began in 1945 and will last until we reach a safe harbor by putting in place machinery that prevents self-destruction.

The book discusses the natural and the anthropogenic risks and then quantifies each of them to put them into perspective. The discussion of each risk is well informed and the risk assessment is sensible. Obviously, there are many uncertainties around such a quantification, but the arguments and numbers are thoroughly considered; in the end it is difficult to disagree with the orders of magnitude proposed by Ord.

Here is the list of risks and their probability of wiping out humanity's potential within the next century:

| Existential catastrophe | Chance within next 100 years |
|---|---|
| Asteroid or comet impact | ~ 1 / 10⁶ |
| Supervolcanic eruption | ~ 1 / 10⁴ |
| Stellar explosion | ~ 1 / 10⁹ |
| Total natural risk | ~ 1 / 10⁴ |
| Nuclear war | ~ 1 / 10³ |
| Climate change | ~ 1 / 10³ |
| Other environmental damage | ~ 1 / 10³ |
| Naturally arising pandemics | ~ 1 / 10⁴ |
| Engineered pandemics | ~ 1 / 30 |
| Unaligned artificial intelligence | ~ 1 / 10 |
| Unforeseen anthropogenic risks | ~ 1 / 30 |
| Other anthropogenic risks | ~ 1 / 50 |
| Total anthropogenic risk | ~ 1 / 6 |
| Total existential risk | ~ 1 / 6 |

In a nutshell, it seems we are playing Russian roulette. One round is loaded into a six-chamber revolver, we spin the cylinder, point the gun at ourselves and pull the trigger. This does not sound like a sensible thing to do, but it is probably a good illustration of humanity's current situation, of which we are mostly unaware.

Interestingly, Toby Ord considers unaligned artificial intelligence the most dangerous risk, and argues:

Very roughly, my approach is to start with the overall view of the expert community that there is something like a one in two chance that AI agents capable of outperforming humans in almost every task will be developed in the coming century. And conditional on that happening, we should not be shocked if these agents that outperform us across the board were to inherit our future. Especially if when looking into the details, we see great challenges in aligning these agents with our values.

(The Precipice, page 168)

Having laid out the severity of the risk landscape, Ord gives recommendations on what to do. These recommendations are directed at political leaders and those who have a say in the big decisions of societies and nations, but also at the ordinary reader. To this end he has co-founded a few organizations and provides these websites for further reading and guidance:

| Topic | Website |
|---|---|
| Effective altruism | effectivealtruism.org |
| Choose your career | 80000hours.org |
| Donations | givingwhatwecan.org |

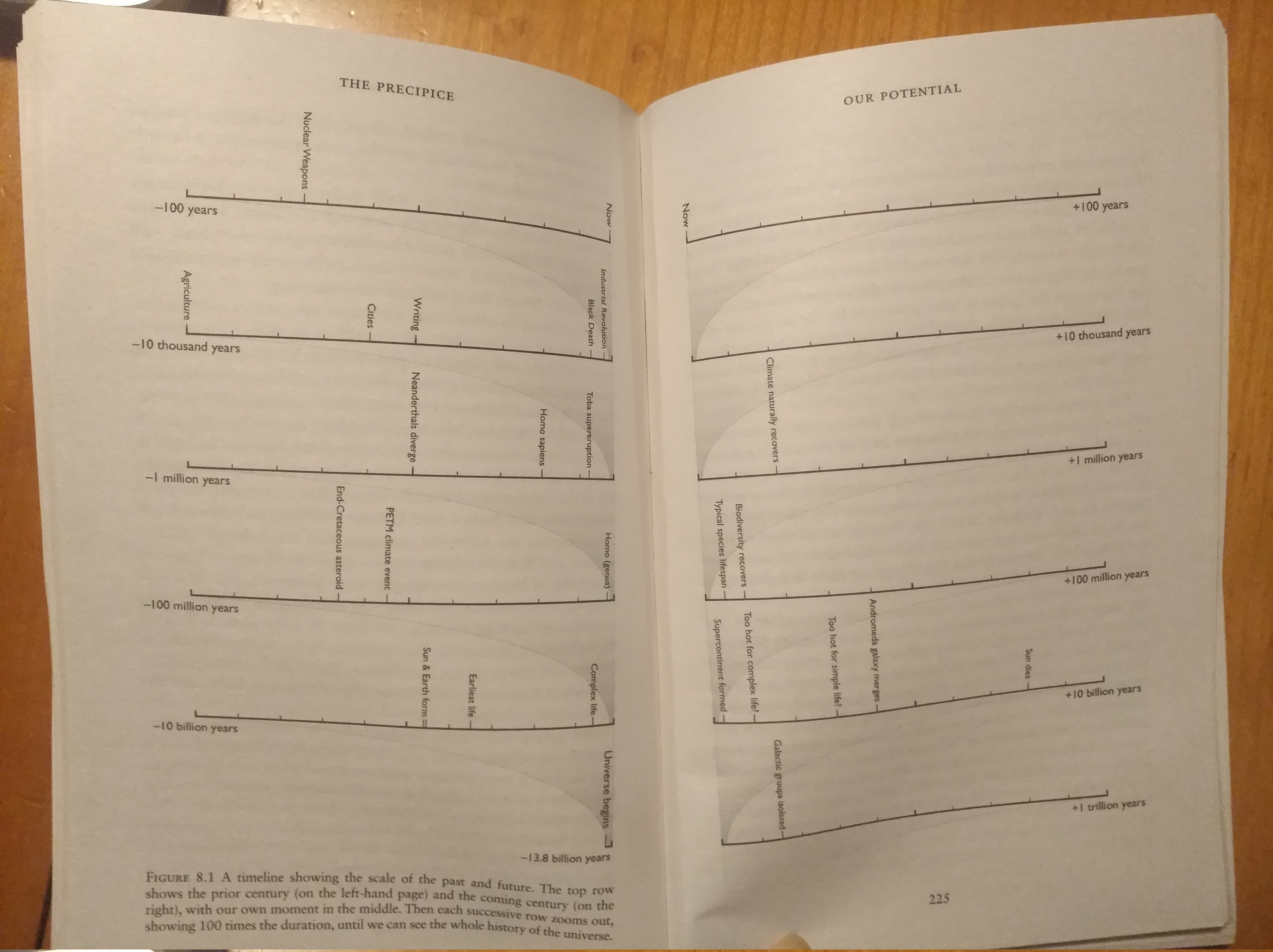

A fascinating part of the book, and of the argument about how much is at stake, concerns humanity's future potential. The last chapter is devoted to this question.

I find it amazing that, with respect to both time and space, the potential is vast and the limits are far away. Regarding time, we have a lot. Our sun will provide favorable conditions on Earth for about another billion years, and if we manage to protect Earth from the excess radiation of a gradually warming sun, we have a few more billion years to live comfortably on our planet. But we are certainly not confined to our solar system, any more than the Polynesians were confined to the Asian continent when they spread out over the vastness of the Pacific Ocean between 5000 and 3000 years ago. It is hard to imagine that, given a few more thousand or million years, we would not travel to and colonize the nearest stars a few light years away. Having done so successfully, our successors could reach the limits of our home galaxy within 10 to 100 million years. Given sufficient time, steady technological progress and a continuous drive and curiosity, not even the vast emptiness between galaxies may constitute an ultimate limit, and humanity or its successors may well venture beyond our galaxy and colonize other galaxies near and far.

While we do not have the faintest idea what will happen in 100 million years, there is nothing implausible about such a scenario, provided we survive the Precipice. Hence what is at stake is the future, which is so much longer than the past. Humanity emerged some 200,000 years ago; if its potential extends hundreds of millions or even billions of years into the future, our responsibility not to squander it is heavy indeed.

(AJ December 2021)