Books - Read and Enjoyed

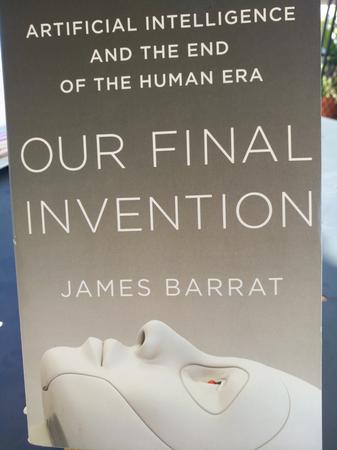

Our Final Invention

Artificial Intelligence and the End of the Human Era

Thomas Dunne Books, 2013

James Barrat

James Barrat’s concern is that, once Artificial Intelligence (AI) has reached a human level, there will be an intelligence explosion resulting in an Artificial Super Intelligence (ASI) that surpasses human capabilities in all relevant aspects. What will be the fate of humanity if this happens? Will humans be allowed to coexist with this ASI?

James Barrat is a documentary filmmaker and he approaches the topic as a journalist. He reviews the positions of leading scientists in the area and interviews most of them.

Among many others, the following experts and organizations are presented:

- The Machine Intelligence Research Institute (MIRI) is devoted to developing friendly AI, i.e. AI that benefits humanity and will not harm people:

“to develop formal tools for the clean design and analysis of general-purpose AI systems, with the intent of making such systems safer and more reliable when they are developed.” (https://intelligence.org/about/)

Michael Vassar and Eliezer Yudkowsky are presented and interviewed as leading figures. Friendly AI is the engineering approach to realizing Isaac Asimov’s Three Laws of Robotics, but it quickly becomes obvious that this endeavor is complicated by the fact that any Artificial Super Intelligence (ASI) will have a complex goal structure and will be able to trade one goal for another, to weigh long-term goals against short-term gains, and to consider who and how many people are affected by its actions. Also, scenarios where ASIs are developed for military purposes are not unlikely, and how to square military objectives with friendly AI will inherently remain a puzzle. Moreover, no foolproof techniques are known to prevent an ASI from modifying or temporarily suspending some of its goals.

- Steve Omohundro is an AI pioneer and now a research scientist at Facebook working on AI-based simulation, and president of the AI think tank Self-Aware Systems.

At Self-Aware Systems we are developing a new kind of software that acts directly on meaning.

(from The Future of Computing: Meaning and Values, by Steve Omohundro, Jan 2012)

In the 2008 paper The Basic AI Drives, Omohundro posits that any ASI will by necessity have several drives, whatever its final goal or utility function. The drives described in that paper are:

- Self-improvement,

- Rationality, in the sense used in economic theory,

- Preservation of its utility function,

- Prevention of counterfeits of the utility,

- Self-protection, and

- Acquisition of resources.

These drives (called instrumental goals by Nick Bostrom) are likely to be present in any ASI, whatever its final goals.

- Irving John Good was an English mathematician who worked with Alan Turing during World War II decoding encrypted messages, and then after the war at Manchester University. In 1968 he moved to Virginia Tech in the US where he remained until his death in 2009.

I.J. Good was the first to describe the intelligence explosion, in his 1965 paper “Speculations Concerning the First Ultraintelligent Machine”:

Let an ultraintelligent machine be defined as a machine that can far surpass all the intellectual activities of any man however clever. Since the design of machines is one of these intellectual activities, an ultraintelligent machine could design even better machines; there would then unquestionably be an “intelligence explosion,” and the intelligence of man would be left far behind. Thus the first ultraintelligent machine is the last invention that man need ever make ...

(cited from Barrat p. 104)

This notion of an intelligence explosion informs, to this day, all concerns about ASI, and in particular about the speed at which ASI will emerge once human-level AI has been achieved.

- The time when the intelligence explosion occurs is today commonly referred to as the singularity. Vernor Vinge, a science fiction author, coined this term in a 1993 talk at NASA with the title “The Coming Technological Singularity”. He wrote:

But if the technological Singularity can happen, it will. Even if all the governments of the world were to understand the “threat” and be in deadly fear of it, progress toward the goal would continue. In fact, the competitive advantage — economic, military, even artistic — of every advance in automation is so compelling that passing laws, or having customs, that forbid such things merely assures that someone else will.

(cited from Barrat p. 118)

- The leading Singularitarian is Ray Kurzweil. In his books The Age of Intelligent Machines, The Age of Spiritual Machines, Fantastic Voyage: Live Long Enough to Live Forever, The Singularity Is Near, and others, he expounds a very positive perspective for humanity, but he combines the discussion of ASI with the development of nanotechnology and the prospect of atom-level engineering. One main hope he entertains is becoming immortal.

On his website kurzweilai.net he announces his new book The Singularity Is Nearer for release in 2022.

- Benjamin Goertzel is devoted to developing AI in various ways and forms, informing and inspiring several organizations: he is the CEO of the decentralized AI network SingularityNET, a blockchain-based AI platform company, and the chief scientist at Hanson Robotics. Goertzel also serves as Chairman of the Artificial General Intelligence Society and the OpenCog Foundation.

No challenge today is more important than creating Artificial General Intelligence (AGI), with broad capabilities at the human level and ultimately beyond. OpenCog is an open-source software project aimed at directly confronting the AGI challenge, using mathematical and biological inspiration and professional software engineering techniques.

(from https://opencog.org/about/)

Throughout the book, Barrat speculates about where ASI may emerge. His candidates are:

- the financial stock market

- the virus and malware ecosystem

- a defense and military project

- a research project like OpenCog or LIDA

- a big corporation that combines AI research with data accumulation, like Google or Facebook

For example, Google cofounder Larry Page said at a London conference called Zeitgeist 2006:

People always make the assumption that we’re done with search. That’s very far from the case. We’re probably only 5 percent of the way there. We want to create the ultimate search engine that can understand anything … some people could call that artificial intelligence.… The ultimate search engine would understand everything in the world. It would understand everything that you asked it and give you back the exact right thing instantly.… You could ask “what should I ask Larry?” and it would tell you.

(cited from Barrat p. 40)

Historian George Dyson mused after a visit to Google:

For thirty years I have been wondering, what indication of its existence might we expect from a true AI? Certainly not any explicit revelation, which might spark a movement to pull the plug. Anomalous accumulation or creation of wealth might be a sign, or an unquenchable thirst for raw information, storage space, and processing cycles, or a concerted attempt to secure an uninterrupted, autonomous power supply. But the real sign, I suspect, would be a circle of cheerful, contented, intellectually and physically well-nourished people surrounding the AI. There wouldn’t be any need for True Believers, or the downloading of human brains or anything sinister like that: just a gradual, gentle, pervasive and mutually beneficial contact between us and a growing something else. This remains a nontestable hypothesis, for now.

(cited from Barrat p. 231)

Barrat’s book is a fascinating tour through the landscape of AI research. While reading, one has the impression that we are heading towards truly exciting times, but the outlook is unpredictable and scary.

(AJ September 2021)