Books - Read and Enjoyed

How We Learn

Penguin Books, 2020

Stanislas Dehaene

The book describes the mechanisms of human learning and is informed as much by neuroscience and cognitive science as by computer science. It is in fact surprising how many of Dehaene’s explanations and elaborations are supported by the way machine learning algorithms work. I suspect the reason lies in how we study mammalian brains: what is experimentally accessible and what is not. Two directions of research have a relatively long history and have brought key insights: the study of nerve cells at the microscopic level, and behavioral experiments that reveal macro-level mechanisms of our brain.

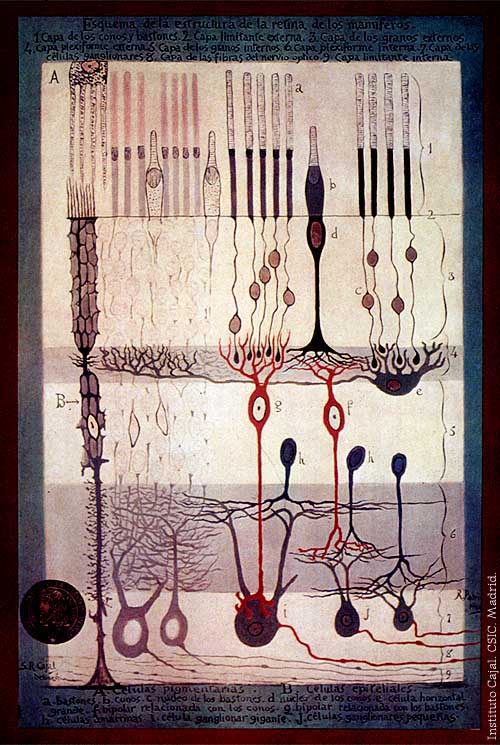

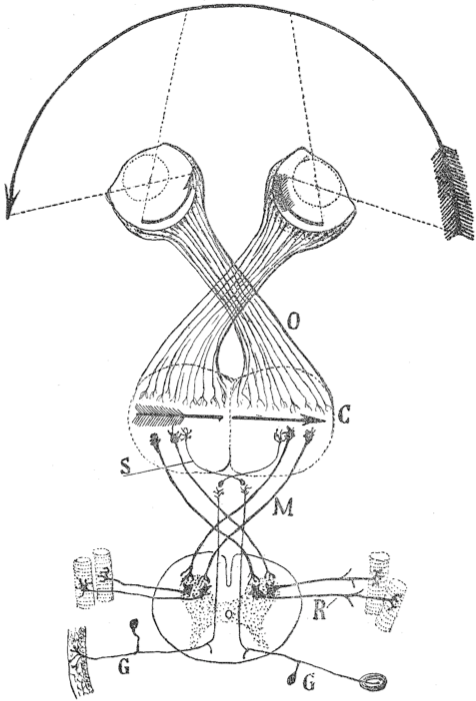

The study of neurons at the cellular level started in earnest with the work of Santiago Ramón y Cajal, who earned a Nobel Prize in 1906 for his discovery that the brain is built up of neuronal cells. Equipped with a microscope and an artistic talent, he produced numerous drawings that shape our perception of the cellular organization of our brain to this day. Cajal revealed the daunting complexity and variety of nerve cells and discovered that they consist of dendrites, a cell body and an axon. He speculated about the direction of information flow by adding arrows to his diagrams: from the dendritic tree to the cell body to the axon. Cajal not only discovered that brain tissue is made up of distinct neural cells but also that they come into contact with each other at points that we today call synapses.

How a message (a “spike”) traverses the synaptic gap from one cell’s axon to the next cell’s dendrite was studied and explained by Thomas Südhof, and others, many years later. Synapses play a key role in learning because they adapt to the activity of information transfer. Their growth, strengthening, weakening and disappearance are key mechanisms of information storage and learning. They adapt over time spans of minutes, hours, days, weeks and months, and keep changing throughout life. A basic rule of learning, put forward by Donald Hebb, is “if neurons fire together they wire together”, meaning that a synaptic connection is strengthened when both the pre-synaptic and the post-synaptic neuron are active at the same time. Thanks to many studies of synaptic adaptations and anatomical changes of neurons in response to neuronal activity, today we understand fairly well the cellular mechanisms underlying learning, memorization and recall. When neurons that react to the image of a particular face are often activated at the same time as neurons representing a name, the connections between these two groups of neurons are strengthened and we associate the face with the name.
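Hebb's rule can be illustrated with a few lines of Python. This is only a minimal sketch, not a model from the book: the function name `hebbian_update`, the learning rate, and the list of co-activation episodes are all hypothetical, and real synapses also weaken and saturate, which is left out here.

```python
def hebbian_update(weight, pre, post, learning_rate=0.1):
    """Hebb's rule: the weight grows in proportion to joint activity.

    `pre` and `post` are activity levels (here simply 0 or 1), so the
    weight only changes when both neurons are active at the same time.
    """
    return weight + learning_rate * pre * post


# Hypothetical history: (face neuron active, name neuron active)
episodes = [(1, 1), (1, 0), (1, 1), (0, 1), (1, 1)]

weight = 0.0  # assumed starting strength of the face-name connection
for face, name in episodes:
    weight = hebbian_update(weight, face, name)

print(f"connection strength after {len(episodes)} episodes: {weight:.2f}")
```

Only the three episodes in which both neurons fire together strengthen the connection; the two episodes with a single active neuron leave it unchanged.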

A huge number of clever behavioral experiments have given us detailed insights into a great variety of mental capabilities of the human and mammalian brain. For instance, babies are born with an innate capability to count and do approximate arithmetic; they have a number sense. Within sight of the baby, objects are moved behind a curtain, then the curtain is removed and the baby’s reaction is observed. If you move one ball and then another ball behind the curtain, and two balls are seen after the curtain is removed, the baby shows no surprise. If there is only one ball, or there are three balls, the baby is very surprised. The same works with larger numbers, like 5+5 balls with the expectation of seeing 10 balls. It works for counting, addition and subtraction. If you move 10 balls behind the curtain and then remove 5, the baby is surprised if there are still 8 balls behind the curtain. Many experiments have confirmed that it is the abstract numbers that babies consider, not other physical properties like the shape, color or size of objects. This innate number sense has also been confirmed in many other animals. For instance, experimenters went to great lengths to make sure that newborn chicks had not seen any object before the experiments, and they could still reason about numbers. Behavioral experiments have given us insight into innate capabilities like a number sense, a sense of probabilities, learning languages and recognizing faces. They have shown how we build up episodic memories, how we interact with other people, how we balance long-term against short-term desires, etc.

While these two lines of investigation have given us an extensive and detailed understanding of the human brain, the level in between seems to be harder to decipher. How do we abstract from 100 pictures of flowers to the concept of flower? How does the neural circuitry correctly predict the trajectory of a ball and direct our body to catch it in flight? How do we estimate that it is safe to cross the street right in front of a moving car? What is the language of thought, what is its grammar and how do we determine its vocabulary? These and many other feats involve the coordinated activity of huge numbers of neurons in different regions of the brain and the communication of large amounts of information across data highways between brain modules. They require the integration of several senses and specialized brain circuitry into a unified assessment. They require coordination over periods of time and the building and usage of symbols and concepts at the right abstraction level, not too low and not too high. Many of these mechanisms are still poorly understood, and therefore a theory about how they may work can greatly structure the investigation and the search for experimental evidence that confirms or refutes hypotheses.

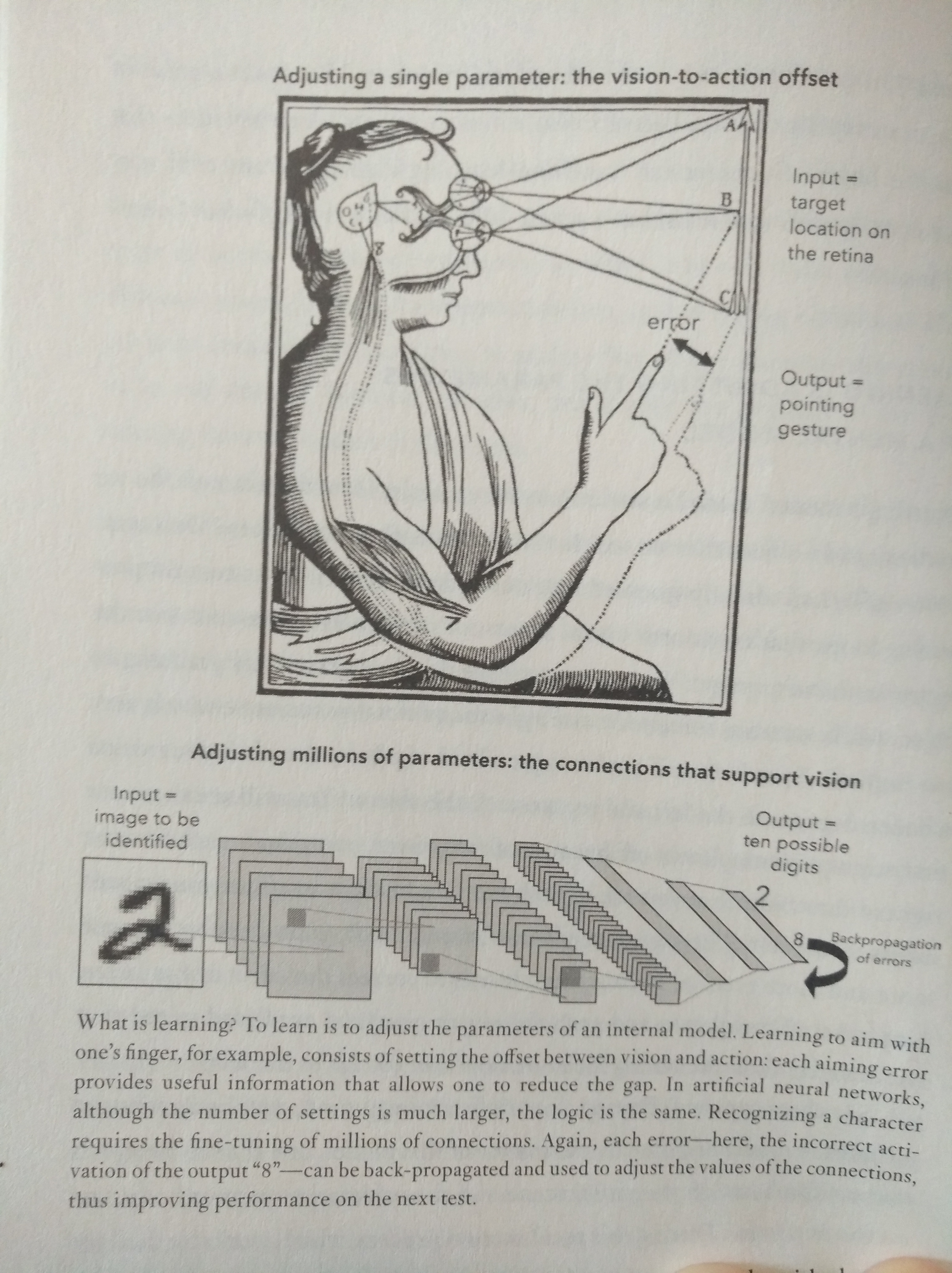

For learning, the rapidly developing field of machine learning in computer science is inspiring a framework for generating hypotheses about how learning in the brain may work. Therefore, I suspect, Dehaene is drawing heavily on the parallels to deep artificial neural networks, a particularly successful branch of machine learning, to contemplate and explain features of learning in the human brain.

Ironically, artificial neural networks were inspired by natural neural networks more than 60 years ago, but have only in the last ten years emerged as a highly successful branch of machine learning in the form of Deep Neural Networks (DNN). DNNs, as alluded to in the figure here taken from the book, consist of tens or even hundreds of layers of neurons. Each layer’s output is the input of the next layer. The “deep” in DNN refers to the large number of layers. DNNs have been shown to outperform humans in a wide range of tasks like object detection, face recognition, sensor data analysis, medical image analysis, games, transforming images and videos into fakes, etc. They have a number of features that certainly also play key roles in human learning. For instance, error correction is as important in DNNs as in human learning. In DNNs an input (a handwritten “2” in the picture) is processed sequentially by all the layers and a response is generated, which is the recognition of a number in this case. During training of the DNN, the correct answer is then presented to the DNN, and the error is propagated back from the output all the way to the input of the network. During this back-propagation, parameters are adjusted such that the same image would be processed more correctly the next time. After this training procedure is repeated hundreds or thousands of times with different images, the parameters in the DNN are adjusted such that it produces correct output for all images in the training set. Moreover, it also performs very well for images that are sufficiently similar to the images in the training set. Hence, the DNN in fact abstracts from the individual images. The larger the training set and the deeper the DNN, the more powerful it becomes in terms of abstraction and object recognition. If you are unhappy with the performance of your 50-layer network, simply use a 200-layer network, increase the training set tenfold, run the training and the results will most likely astonish you.
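The training loop described above (forward pass, comparison with the correct answer, back-propagation of the error, parameter adjustment) can be sketched in plain Python. This is a toy illustration under several assumptions of mine, not the network from the book's figure: it has a single hidden layer instead of many, learns the XOR function instead of handwritten digits, and the names `forward`, `train` and `mean_squared_error` as well as the learning rate and layer size are arbitrary choices.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, w_hidden, w_out):
    """Process the input layer by layer: hidden layer first, then output."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(ws, x))) for ws in w_hidden]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden + [1.0])))
    return hidden, output


def mean_squared_error(data, w_hidden, w_out):
    return sum((forward(x, w_hidden, w_out)[1] - t) ** 2 for x, t in data) / len(data)


def train(data, n_hidden=4, epochs=5000, lr=0.5, seed=0):
    rng = random.Random(seed)
    n_in = len(data[0][0])
    w_hidden = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    w_out = [rng.uniform(-1, 1) for _ in range(n_hidden + 1)]  # last weight is a bias
    for _ in range(epochs):
        for x, target in data:
            hidden, output = forward(x, w_hidden, w_out)
            # Error at the output, scaled by the slope of the sigmoid.
            delta_out = (output - target) * output * (1.0 - output)
            # Propagate the error back to each hidden unit through its outgoing weight.
            delta_hidden = [delta_out * w_out[j] * hidden[j] * (1.0 - hidden[j])
                            for j in range(n_hidden)]
            # Adjust every parameter a little against its error gradient.
            for j, h in enumerate(hidden + [1.0]):
                w_out[j] -= lr * delta_out * h
            for j in range(n_hidden):
                for i, xi in enumerate(x):
                    w_hidden[j][i] -= lr * delta_hidden[j] * xi
    return w_hidden, w_out


# XOR training set; the constant third input of 1.0 acts as a bias term.
xor_data = [([0.0, 0.0, 1.0], 0.0), ([0.0, 1.0, 1.0], 1.0),
            ([1.0, 0.0, 1.0], 1.0), ([1.0, 1.0, 1.0], 0.0)]

untrained = train(xor_data, epochs=0)
trained = train(xor_data)
print("error before training:", mean_squared_error(xor_data, *untrained))
print("error after training: ", mean_squared_error(xor_data, *trained))
```

Calling `train` with `epochs=0` returns the randomly initialized weights, so the two printed numbers show how much the repeated error correction improves the network on its training set.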

Eight Definitions of Learning

The book starts out with eight definitions of learning:

1) To learn is to form an internal model of the external world

2) Learning is adjusting the parameters of a mental model

3) Learning is exploiting a combinatorial explosion

4) Learning is minimizing errors

5) Learning is exploring the space of possibilities

6) Learning is optimizing a reward function

7) Learning is restricting a search space

8) Learning is projecting a priori hypotheses

These definitions seem to be almost exclusively inspired by computer science, and exhibit little trace of neuroscience or psychology. However, they seem to apply exceedingly well to what is actually happening in the human brain, which lends support to the idea that a theoretical framework of learning inspired by computer science can be very fruitful in researching the brain’s capabilities and mechanisms. Still, Dehaene is quick to point out that there are a number of routine accomplishments of the human brain that machine learning algorithms cannot replicate. For example, DNNs need thousands or, better, hundreds of thousands of examples in the training session, while humans can learn very effectively from a few examples, or even only one. DNNs are most effective when the correct answer for the training set is known, which is called supervised learning. Human learning is mostly unsupervised, and very effective at that. Humans often learn by analogy, transferring rules and patterns from one domain to another. If you play a lot of chess, your chess strategies may inform you when planning your career. When you do a lot of strenuous hiking, you may take its lessons about ups and downs, effort and sweat, planning and perseverance into other domains like starting a business. Machine learning algorithms cannot do this today and are not close to it. Also, humans are good at penetrating new territory and learning the regularities of a new domain even if they know little or nothing about it at the outset. Human “learning is inferring the grammar of a domain”, writes Dehaene. Again, computer science has yet to come up with algorithms that can do that.

As a result, research in artificial intelligence and research in cognitive science inspire each other. Computer scientists see in the example of the brain what is possible, and use it to find ways to accomplish similar behavior. Neuroscientists can hypothesize and test whether mechanisms developed successfully for machine learning are also at work in the human brain.

Memory in the Brain

Dehaene describes our current understanding of memory structures in the brain as follows.

Working memory

consists of activity patterns of neurons. There is no permanent, physical change in the brain underlying working memory. If the current pattern of activity changes, the current content of working memory is lost. The amount of information in working memory is very limited; only a few symbols can be stored there. The duration is also limited, and after a few seconds the content of working memory fades away.

Episodic memory

is a weird thing. Episodic memory gives us identity, history and stability over time. It seems highly efficient. Streams of events, images and scenarios are continuously recorded without effort. It also seems highly effective at prioritizing: important events are remembered years later, while unimportant events quickly fade into oblivion. The hippocampus, a brain module below the cortex, is the gatekeeper to episodic memory, recording the unfolding episodes of our lives.

Neurons in the hippocampus seem to memorize the context of each event: they encode where, when, how, and with whom things happened. They store each episode through synaptic changes, so we can remember it later. The famous patient H.M., whose hippocampi in both hemispheres had been obliterated by surgery, could no longer remember anything: he lived in an eternal present, unable to add the slightest new memory to his mental biography.

(p 91)

But H.M. could still remember and recall his life before the surgery. So while the hippocampus records episodic memory, the memory is not necessarily stored there. Also, H.M. could still learn new skills which he could use later on. But he did not remember that, when, or how he had acquired a new skill.

Semantic memory

While the hippocampus is key in recording new memories, they are stored throughout the brain.

At night, the brain plays them back and moves them to a new location within the cortex. There, they are transformed into permanent knowledge: our brain extracts the information present in the experiences we lived through, generalizes it, and integrates it into our vast library of knowledge of the world. After a few days, we can still remember the name of the president, without having the slightest memory of where or when we first heard it: from episodic, the memory has now become semantic. What was initially just a single episode was transformed into long-lasting knowledge and its neural code moved from the hippocampus to the relevant cortical circuits.

(p 91)

Procedural memory

is about skills like tying shoes, reciting a poem, calculating, juggling, playing the violin, or cycling.

When we repeat the same activity over and over again [...] neurons in the cortex and other subcortical circuits eventually modify themselves so that information flows better in the future. Neuronal firing becomes more efficient and reproducible, pruned of any parasitic activity, unfolding unerringly and as precisely as clockwork. This is procedural memory: the compact, unconscious recording of patterns of routine activity.

(p 91 ff)

The hippocampus plays no or only a minor role; that is why H.M. could learn new skills like writing backwards while looking at his hand in a mirror. An important host of procedural memory is a set of subcortical neural circuits called “basal ganglia”.

Learning in the Brain

Cells, dendrites, synapses

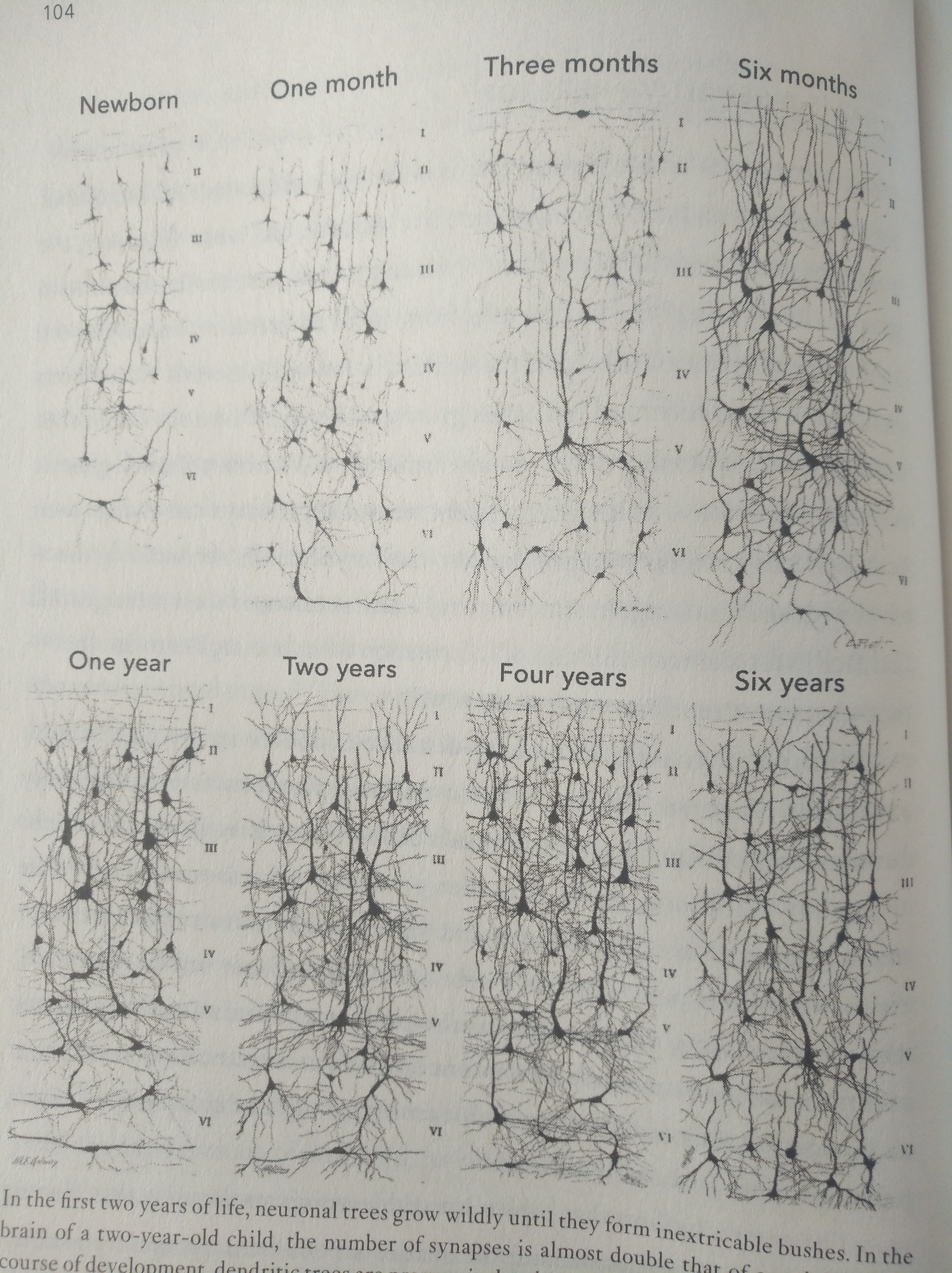

At the neural level, learning happens foremost through adapting connections between neurons: strengthening and weakening existing synapses, building new synapses and removing unnecessary ones. Also, the dendritic tree of neurons can grow and shrink.

During the growth of the embryo, the baby and the child, the neural architecture is built according to a relatively fixed scheme. The brain has an innate architecture with many specialized modules, e.g. for the visual pathways, the auditory sensors, motor control, emotion management, face recognition, language understanding and production. During this growth period the neural circuits adapt to specific needs and conditions. When some neural circuits are damaged, others can jump in and assume their task to some extent. The book relates the story of the child Nico, who lacked almost the entire right hemisphere. The neurons in his intact left hemisphere assumed many of the tasks that are usually located in the right hemisphere, to the extent that Nico developed artistic talents in drawing and earned a university diploma in IT. Hence, during growth the brain shows great capacity to adapt, but this capacity is greatest during the sensitive period of the corresponding brain module, and is greatly reduced afterwards.

in many brain regions, plasticity is maximal only during a limited time interval, which is called "sensitive period." It opens up in early childhood, peaks, and then gradually decreases as we age. The entire process takes several years and varies across brain regions: sensory areas reach their peak around the age of one or two years old, while higher order regions such as the prefrontal cortex peak much later in childhood or even early adolescence. What is certain, however, is that as we age, plasticity decreases, and learning, while not completely frozen, becomes more and more difficult.

(p 103)

Four pillars of learning

While learning is implemented at the cellular level, at the dendrites and synapses, there are several high level mechanisms that steer what and when we learn. Dehaene highlights four pillars of learning: attention, active engagement, error feedback, consolidation.

Attention

is key in selecting which information to process.

In cognitive science, "attention" refers to all the mechanisms by which the brain selects information, amplifies it, channels it, and deepens its processing. These are ancient mechanisms in evolution: whenever a dog reorients its ears or a mouse freezes up upon hearing a cracking sound, they're making use of attention circuits that are very close to ours.

(p 147)

Essentially, attention is a resource allocation mechanism. The information processing system of the brain has limited resources and there is always an overabundance of in-flowing information. Attention prioritizes information and steers the decision making process.

Active engagement

Humans, and other animals, are devoted experimenters. We, mostly unconsciously, formulate hypotheses and put them to the test. When a child drops a spoon ten times in a row, it conducts experiments, which are a much more effective way to learn about the physics of gravity, solid objects, spoons and sounds than passively watching objects fall by coincidence. It is so much faster to collect relevant information by directed experiments than by passive observation. Children do little else than experiment during their waking hours. They do experiments to learn about the physics of the world but also to learn about the social interaction between humans. Even as adults we often conduct experiments more or less unconsciously when learning a new skill, driving a new car or starting a new job.
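The speed advantage of directed experiments over passive observation can be made concrete with a toy comparison. The setup is entirely my own assumption and not taken from the book: a learner has to pin down a hidden threshold among 1024 possibilities, and each trial only reveals whether the threshold lies at or below the tested value. The function names are hypothetical.

```python
import random


def directed_experiments(hidden, low=0, high=1023):
    """Choose each test so that it halves the remaining hypotheses."""
    trials = 0
    while low < high:
        probe = (low + high) // 2
        trials += 1
        if hidden <= probe:
            high = probe
        else:
            low = probe + 1
    return trials


def passive_observation(hidden, low=0, high=1023, seed=0):
    """Take whatever cases the world happens to present, in random order."""
    rng = random.Random(seed)
    lower, upper = low, high
    trials = 0
    while lower < upper:
        probe = rng.randint(low, high - 1)  # not chosen by the learner
        trials += 1
        if hidden <= probe:
            upper = min(upper, probe)
        else:
            lower = max(lower, probe + 1)
    return trials


hidden_threshold = 300  # arbitrary value the learner has to discover
print("directed experiments:", directed_experiments(hidden_threshold), "trials")
print("passive observation: ", passive_observation(hidden_threshold), "trials")
```

The directed learner always needs exactly ten trials for 1024 possibilities, whereas the passive learner has to wait until chance happens to deliver the two informative cases right next to the threshold.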

Error feedback

Without feedback, learning is impossible. If we do not know when a perceived categorization is correct and when an action leads to a desired result, we cannot improve.

The brain seems to continuously make predictions at all levels. Some claim that this is about the only thing the brain does: making predictions and improving them (see for instance Jakob Hohwy, The Predictive Mind, or The Bayesian Brain).

the brain learns only if it perceives a gap between what it predicts and what it receives. No learning is possible without an error signal: "Organisms only learn when events violate their expectations"[cited from Rescorla and Wagner 1972 "A theory of Pavlovian conditioning"]. In other words surprise is one of the fundamental drivers of learning.

(p 201)
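The idea that learning is driven by the gap between prediction and outcome can be sketched in the spirit of the Rescorla-Wagner rule cited above, where the expectation is moved by a fraction of the prediction error after every trial. This is a simplified single-cue version; the function name, the learning rate of 0.3 and the sequence of outcomes are assumptions chosen for illustration.

```python
def rescorla_wagner(outcomes, learning_rate=0.3):
    """Update an expectation from a sequence of observed outcomes.

    Returns one (surprise, expectation) pair per trial, where surprise
    is the prediction error: outcome minus current expectation.
    """
    expectation = 0.0
    history = []
    for outcome in outcomes:
        surprise = outcome - expectation
        expectation += learning_rate * surprise  # no surprise, no change
        history.append((round(surprise, 3), round(expectation, 3)))
    return history


# Hypothetical conditioning experiment: the reward arrives on every trial.
for trial, (surprise, expectation) in enumerate(rescorla_wagner([1.0] * 5), start=1):
    print(f"trial {trial}: surprise={surprise}, expectation={expectation}")
```

As the expectation approaches the actual outcome, the surprise shrinks from trial to trial and so does the amount learned; a perfectly predicted event changes nothing.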

Consolidation

is the process of making acquired knowledge or an acquired skill automatic and unconscious. While learning happens most effectively when the conscious mind pays attention to the subject of learning, consolidated knowledge or skill becomes unconscious, automatic and highly efficient.

It seems that sleep plays a crucial role in the consolidation process. In experiments with rats the role of sleep could be mapped in detail.

The rat's brain thus replays, at high speed, the patterns of activity it experienced the day before. Every night brings back memories of the day. And such replay is not confined to the hippocampus, but extends to the cortex, where it plays a decisive role in synaptic plasticity and the consolidation of learning. Thanks to this nocturnal reactivation, even a single event of our lives, recorded only once in our episodic memory, can be replayed hundreds of times during the night. [...] Such memory transfer may even be the main function of sleep. [...] Indeed, in the cortex of a rat that learns to perform a new task, the more a neuron reactivates during the night, the more it increases its participation in the task during the following day. Hippocampal reactivation leads to cortical automation.

(p 227)

Stanislas Dehaene is a cognitive neuroscientist with training in mathematics and experimental psychology. He is a professor at the Collège de France and, since 1989, the director of INSERM Unit 562, “Cognitive Neuroimaging”. He has written several other popular science books that are worth reading:

Consciousness and the Brain (2014).

(AJ July 2021)